Data

Nanohistory is designed to allow scholars to integrate their history-as-data with existing Linked Open Data resources. You can harvest information from existing online Linked Open Data resources like OCLC, Geonames, VIAF, DBpedia or Europeana. Or you can manually add data, or upload archival findings using Import Templates. Binding ongoing scholarly work with the Linked Open Data world allows users to critique open data sources as part of their on going expert research. This not only allows it to introduce new findings into the world of Linked Open Data, but gives experts a way to critique the Open Data we already have by refining it. It closes the critical loop, making researchers not only consumers of Open Data, but producers, publishing their data into the Linked Open Data world.

An essential element of this functionality is the growing number of Identifier Types that permit easy linking of online resources for Nanohistory entities and records.

Linked Open Data

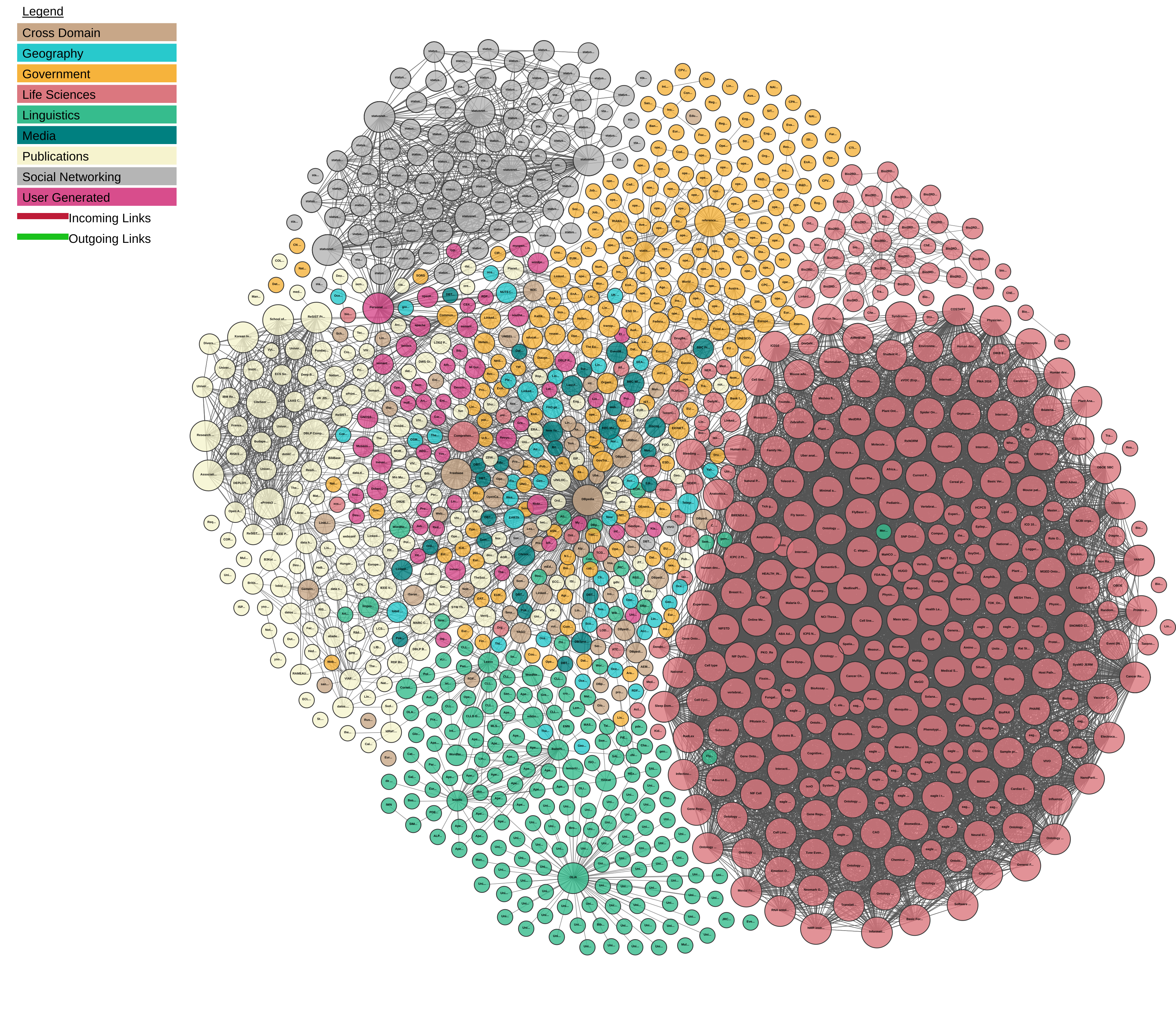

For many the next stage of the internet is a web that acts more like a vast database than a series of separate pages. Whether we call this the 'semantic web' is besides the point - we're in an age where human interaction on the web is quickly being overtaken by machine to machine forms of exchange and dissemination. Standardized formatting is key to these transactions, and while there are numerous standards, several types of data stand out: JSON and XML, in particular, RDF XML. When this data is freely accessible, and employs standard descriptors or vocabularies (called namespaces), it is Open Data. In cases where data references other resources or data on another machine, it's linked. Linked Open Data is often referenced alongside the Research Description Framework, or RDF, which is a method of referencing data and describing it to make it interoperable using namespaces and a particular format: the triple (subject->predicate->object). Linked Open Data is a vast topic; it is enough to say here that Nanohistory interfaces with many Open Data resources, like DBPedia.org and Freebase, as well as more specific resources like VIAF, OCLC, and Geonames, to allow users to harvest and use the information that already exists online. Importantly, it also allows the editing of this data by individual users, a long-standing critique of the Open Data architecture. Nanohistory is designed to interface the Linked Open Data Cloud.

For many the next stage of the internet is a web that acts more like a vast database than a series of separate pages. Whether we call this the 'semantic web' is besides the point - we're in an age where human interaction on the web is quickly being overtaken by machine to machine forms of exchange and dissemination. Standardized formatting is key to these transactions, and while there are numerous standards, several types of data stand out: JSON and XML, in particular, RDF XML. When this data is freely accessible, and employs standard descriptors or vocabularies (called namespaces), it is Open Data. In cases where data references other resources or data on another machine, it's linked. Linked Open Data is often referenced alongside the Research Description Framework, or RDF, which is a method of referencing data and describing it to make it interoperable using namespaces and a particular format: the triple (subject->predicate->object). Linked Open Data is a vast topic; it is enough to say here that Nanohistory interfaces with many Open Data resources, like DBPedia.org and Freebase, as well as more specific resources like VIAF, OCLC, and Geonames, to allow users to harvest and use the information that already exists online. Importantly, it also allows the editing of this data by individual users, a long-standing critique of the Open Data architecture. Nanohistory is designed to interface the Linked Open Data Cloud.Archival Data

Scholars produce data all the time; it's lies at the core of their research. Yet humanities scholars often don't think of their work as 'data' per se, or necessarily structure it in ways that would work best for another scholar. Humanities data is heterogeneous and messy, and perhaps that's the point. Importantly, however, a humanities researcher might be only one of a handful of individuals who have seen, used, and fully comprehend the value of an archival source or holding. The data that they produce through traditional and meticulous research methods is invaluable, yet often lies locked and inaccessible in key ways: in the heterogeneous idiosyncractic messiness of private research notes or data collections, or embedded within the prose of academic publications. Nanohistory calls humanities scholars to participate in the communal project of contributing the basics of their archival research to the building and critique of Open Data. Archival works contain a wealth of information and data that is only paralleled by the amount of data produced by social media. Most archives do not have the funding to digitize, let alone document, unstructured data. Yet Nanohistory allows its transformation into Open Data by giving individual scholars the means to collate archival data, and enrich it with existing Open Data sources. This kind of combining or mashing up of Open and Archival data, and even what we might hold as the automated processes of big data, with the manual labour of an individual scholar huddled away in a county or society archive, lies at the heart of Nanohistory's interests.